Design and Implementation of a Multispectral Imaging System

The goal of the project is to build a multispectral imaging system using devices such as a highly sensitive camera, a tunable filter, a monochromator and a spectroradiometer. The project consists of

- the design of the measurement system,

- integration of each of the devices in the common framework,

- and implementation of the measurement system.

During the master's thesis, the work will be finalized with

- the calibration of the multispectral imaging system,

- and verification of the measurements.

Project Group

| Advisors | Members |
|---|---|
| Philipp Ziemer |

Design of a Multispectral Imaging System

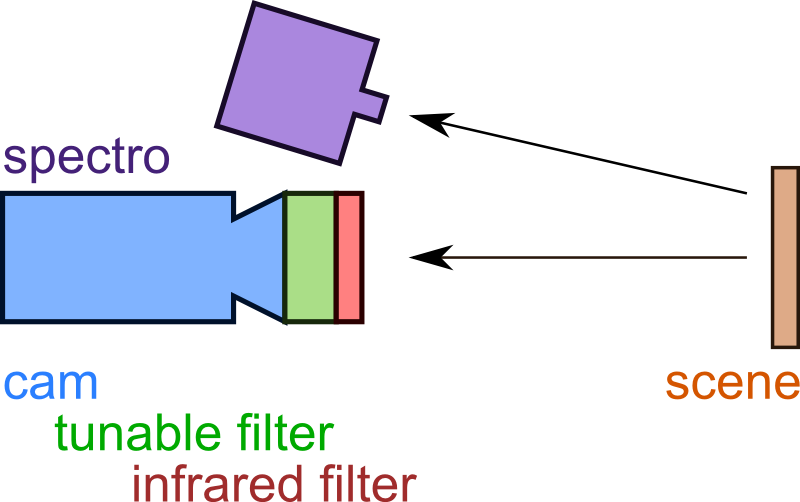

To understand which technologies the project required, the theoretical design of the multispectral imaging system first had to be evaluated and understood. To acquire multispectral images, the same hardware as for regular pictures is used together with advanced filtering [1][2][3]. The difference from regular imaging is that the system has more than three channels. The multispectral imaging system used in this experiment consists of a number of different devices and an optional infrared blocking filter.

The figure shows the current setup of the devices during acquisition of a multispectral image of a scene. A high-resolution, 14-bit cooled CCD camera (blue) is used to acquire scenes. The different channels are filtered with a fast tunable liquid crystal filter (green), which will be mounted in front of the camera lens. In addition, a telespectroradiometer (purple) is used together with an integrating sphere to calibrate the system. Eventually it will also be used to record scene references for data normalization. The calibration process and final setup will be part of my master's thesis.

Implementation of the Devices in the iOS Framework

After the initial design, the hardware and technologies had to be understood, beginning with the camera. To test the camera, the basic software delivered with the device was used. After these first steps, which covered the camera setup and the acquisition of images for later comparison, the documentation and the API had to be analyzed [5].

The API included with the camera was complicated to handle. Since it was initially designed for Windows, only an incomplete and faulty implementation for Linux was available. This had to be adapted and debugged in order to work with our system, which was done mainly by Roman Byshko. Once the API worked correctly, a script was written to test it. This script was the first step towards understanding the API. The first test included initialization, an initial readout of the camera settings, and later on basic image acquisition without advanced settings such as exposure control. Since the API contained principles which were new to me, understanding it and learning the basic functionality took some time. After this step was achieved, the script was adapted and included in the iOS framework.

The iOS framework is the common framework of the Institute for Optical Systems of the HTWG Konstanz (iOS homepage). It includes all the devices of the institute so that they can be combined whenever they are used together. The tunable filter was already implemented with the required functionality. The framework is implemented using the Qt framework and CMake, and its documentation is generated with Doxygen. The pco.camera 4000 had to be integrated by me. After a few months of work, the following features are available in the framework:

- automatic initialization of all devices,

- taking single images or looping image sequences,

- preview of images acquired by the camera,

- saving images as 16-bit TIFF files (written with Libtiff) for post-processing,

- and a sequencer tool for automatic acquisition of images over a given exposure range and filter stepping.
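The idea behind the sequencer tool can be sketched as a nested loop over filter wavelengths and exposure times. The following is only an illustrative sketch, not the code of the iOS framework: the names `AcquisitionStep` and `buildSequence`, the units (nm, ms) and the geometric exposure stepping are assumptions made for this example.

```cpp
#include <cassert>
#include <vector>

// One planned capture (hypothetical type, not part of the iOS framework).
struct AcquisitionStep {
    int wavelengthNm;   // tunable filter setting, assumed to be given in nm
    double exposureMs;  // camera exposure time, assumed to be given in ms
};

// Build an acquisition plan: for every filter wavelength from startNm to
// stopNm (inclusive, in steps of stepNm), plan exposures starting at minMs
// and multiplied by `factor` until maxMs would be exceeded.
std::vector<AcquisitionStep> buildSequence(int startNm, int stopNm, int stepNm,
                                           double minMs, double maxMs,
                                           double factor) {
    assert(stepNm > 0 && startNm <= stopNm);
    assert(minMs > 0.0 && minMs <= maxMs && factor > 1.0);

    std::vector<AcquisitionStep> plan;
    for (int nm = startNm; nm <= stopNm; nm += stepNm) {
        for (double ms = minMs; ms <= maxMs; ms *= factor) {
            plan.push_back({nm, ms});
        }
    }
    return plan;
}
```

For example, `buildSequence(400, 700, 100, 1.0, 8.0, 2.0)` plans four wavelengths with four exposures each. A real sequencer would then set the filter and the camera exposure for each step before triggering the acquisition.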

To post-process the images, a suitable exposure control had to be chosen. For this purpose I evaluated some literature, including one-shot optimal exposure control and exposure control using brightness histograms [6]. These techniques did not seem to serve our purpose well. I finally decided to use High Dynamic Range (HDR) images, because they retain most of the information of the original scene. The functionality for recording an image sequence under different exposures is included in the sequencer tool. The image sequences recorded with different exposure steps for one wavelength will be post-processed in Matlab by combining the Low Dynamic Range (LDR) images into an HDR image according to [4]. Finally, tests for optimal settings will be conducted during the master's thesis.
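To illustrate the combination of LDR exposures into an HDR image, the following sketch shows one common approach: a weighted average of the pixel values divided by their exposure times. It is not the Matlab post-processing used in the project. It assumes a linear sensor response, a simple hat-shaped weighting function, and images stored as flat arrays of 16-bit values; the names `hatWeight` and `mergeExposures` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hat-shaped weight (an assumption of this sketch): mid-range values get the
// highest weight, values at 0 or at maxValue (saturation) get weight zero.
double hatWeight(std::uint16_t value, std::uint16_t maxValue) {
    const double mid = maxValue / 2.0;
    const double v = value;
    return (v <= mid ? v : maxValue - v) / mid;
}

// Merge several exposures of one spectral band into a relative radiance map.
// frames[i] holds the pixels of exposure i, exposureMs[i] its exposure time.
// Assumes a linear sensor response, so value / exposure estimates radiance.
std::vector<double> mergeExposures(
        const std::vector<std::vector<std::uint16_t>>& frames,
        const std::vector<double>& exposureMs,
        std::uint16_t maxValue) {
    assert(!frames.empty() && frames.size() == exposureMs.size());
    const std::size_t pixelCount = frames[0].size();

    std::vector<double> radiance(pixelCount, 0.0);
    for (std::size_t p = 0; p < pixelCount; ++p) {
        double weightedSum = 0.0;
        double weightSum = 0.0;
        for (std::size_t i = 0; i < frames.size(); ++i) {
            assert(frames[i].size() == pixelCount);
            const double w = hatWeight(frames[i][p], maxValue);
            weightedSum += w * frames[i][p] / exposureMs[i];
            weightSum += w;
        }
        // If every exposure is fully dark or saturated at this pixel,
        // fall back to the estimate from the first frame.
        radiance[p] = weightSum > 0.0 ? weightedSum / weightSum
                                      : frames[0][p] / exposureMs[0];
    }
    return radiance;
}
```

With the 14-bit camera, `maxValue` would be 16383. Saturated pixels then contribute nothing to the average, so a bright region is reconstructed from the shorter exposures only.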

Technologies Used

The following list provides an overview of the technologies which were previously unknown to me and had to be learned during the project:

- theory behind multispectral images [1][2][3],

- setup of a multispectral imaging system [1][2][3],

- CMake (CMake project page),

- C++,

- Qt framework (Qt project page),

- Doxygen (Doxygen project page),

- Libtiff (Libtiff project page),

- HDR Imaging [4],

- and pco.camera 4000 API [5].

Future Work

The calibration and verification process will be part of my master's thesis on this project.

Bibliography

- [1] Jon Yngve Hardeberg. Multispectral Color Image Acquisition. Colour Imaging: Vision and Technology, 1999.

- [2] Jon Yngve Hardeberg. Multispectral Color Imaging. NORSIGnalet, 2001.

- [3] Hans Brettel, Jon Yngve Hardeberg and Francis Schmitt. Multispectral Image Capture Across the Web. Signal and Image Processing Department, Ecole Nationale Superieure des Telecommunications, Paris, France.

- [4] Erik Reinhard, Greg Ward, Sumanta Pattanaik, Paul Debevec, Wolfgang Heidrich and Karol Myszkowski. High Dynamic Range Imaging: Acquisition, Display, and Image-Based Lighting. Morgan Kaufmann, 2nd edition, 2010.

- [5] pco.imaging. Sensitive Cameras: pco.4000. pco.4000 homepage.

- [6] David Ilstrup and Roberto Manduchi. One-Shot Optimal Exposure Control. Computer Vision ECCV 2010, pp. 200-213, 2010.